Summary

AI email assistants come with hidden privacy risks. This article explains what auditors check (storage, logs, retention), outlines typical organizational mistakes, and shows how ephemeral processing solves 90% of these issues.

Artificial intelligence accelerates business communication — drafting replies, sorting messages, and generating summaries. But with every step toward automation, privacy and compliance obligations grow. Without strong governance, AI email assistants can unintentionally accumulate sensitive data and create new risks. This article examines what auditors evaluate when companies deploy such tools, the most common mistakes organizations make, and why ephemeral processing — processing without persistent storage — eliminates the majority of these risks. The examples and recommendations target SMEs and consulting firms aiming to build privacy-compliant workflows.

1. What Auditors Examine When Reviewing AI Email Assistants

When companies adopt AI-powered email assistants, auditors assess not only technical functionality but also how well these systems integrate with internal controls. Audits commonly follow frameworks such as SOC 2, SOX, ISO 27001, and increasingly the NIST Privacy Framework and NIST AI Risk Management Framework (AI RMF) in the United States. These frameworks emphasize risk-based controls, accountability, and operational visibility.

1.1 Control Design and Operating Effectiveness

Auditors differentiate between design effectiveness and operational effectiveness of controls. A guidance document by Zluri notes that auditors first determine whether a control is “logically adequate” to prevent or detect risks. This includes:

- review frequency

- qualifications of responsible staff

- completeness of system coverage

- remediation timelines

- clear escalation paths

Second, they test operating effectiveness: was the control executed as intended? This includes sampling audit trails, reviewing tickets, confirming approvals, and re-performing deprovisioning steps. A control counts as effective only if both dimensions are met.

These evaluation principles align with the SOC 2 Trust Services Criteria and with U.S. expectations under Section 5 of the FTC Act, which prohibits unfair or deceptive practices. The FTC has applied it both to deceptive statements about security and to failures to maintain reasonable safeguards.

1.2 Preferred Evidence and Logging Standards

Evidence follows a hierarchy: system-generated logs and automated reports are strongest because they are difficult to manipulate. Third-party documents or handwritten notes are weaker forms of evidence.

Auditors evaluate:

Storage locations and data flows

AI assistants often build personal knowledge bases for each user, creating new data stores (semantic indexes, user caches, vector databases). Auditors require a current end-to-end dataflow map, including “hidden and implicit storage locations.”

Access and activity logs

Systems like Microsoft Copilot generate audit logs for every interaction. These logs show:

- which resources (emails, files) the assistant accessed

- which action occurred (read, write, classify)

- which sensitivity labels were triggered

- which user initiated the action

Auditors ask how long logs are retained (Microsoft: typically 180 days), who can access them, and whether logs are immutable.

In the U.S., these logs may also become subject to eDiscovery, litigation holds, or subpoenas. Under the third-party doctrine, data held by a service provider generally receives weaker Fourth Amendment protection, which is a major difference from EU practice.
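The log fields and the immutability question above can be illustrated with a small hash chain. This is a minimal sketch, not how Microsoft or any other vendor implements audit logging: the field names and the helpers `append_entry` and `verify_chain` are hypothetical. It also makes logs tamper-evident rather than truly immutable, which would additionally require write-once storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # stands in for "no previous entry"


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(chain: list, user: str, resource: str, action: str,
                 label: str, timestamp: str) -> dict:
    """Append an entry whose hash covers its fields and the previous hash."""
    body = {
        "user": user,            # who initiated the action
        "resource": resource,    # which email or file was accessed
        "action": action,        # e.g. "read", "write", "classify"
        "label": label,          # sensitivity label that was triggered
        "timestamp": timestamp,  # ISO 8601 string supplied by the caller
        "prev_hash": chain[-1]["entry_hash"] if chain else GENESIS_HASH,
    }
    entry = {**body, "entry_hash": _digest(body)}
    chain.append(entry)
    return entry


def verify_chain(chain: list) -> bool:
    """Return False if any entry was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True
```

Because each entry's hash includes the previous one, editing an old entry invalidates every later entry, which gives an auditor a cheap integrity check over a sampled log.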

Retention periods

Under GDPR's storage limitation principle (Art. 5(1)(e)), and similarly under U.S. state laws (CCPA/CPRA, CDPA, CPA, CTDPA, UCPA), logs and email content may be retained only as long as necessary for the stated purpose. Best practices recommend log rotation and automatic deletion.
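Automatic deletion can be sketched as a scheduled purge driven by a retention schedule. The example below is illustrative only: the record kinds, the day counts in `RETENTION_DAYS`, and the `purge_expired` helper are assumptions, and real values would come from the organization's retention policy.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention schedule in days; actual values come from policy.
RETENTION_DAYS = {"audit_log": 180, "ai_summary": 30, "draft": 7}


def purge_expired(records, now=None):
    """Split records into (kept, purged) according to RETENTION_DAYS.

    Each record is a dict with a "kind" key and a timezone-aware
    "created_at" datetime. An unknown kind raises KeyError on purpose,
    so unclassified data surfaces instead of being silently kept.
    """
    now = now or datetime.now(timezone.utc)
    kept, purged = [], []
    for record in records:
        limit = timedelta(days=RETENTION_DAYS[record["kind"]])
        if now - record["created_at"] > limit:
            purged.append(record)
        else:
            kept.append(record)
    return kept, purged
```

Returning the purged records separately lets the caller write a deletion receipt before discarding them, which supports the "proof of deletion" evidence auditors ask for.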

Encryption and access control

Logs should be encrypted, access must follow least-privilege principles, and entitlements must be reviewed regularly.

Legal basis and transparency

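GDPR requires a lawful basis for processing (consent is only one of the six bases in Art. 6; explicit consent applies mainly to special categories of data under Art. 9), along with transparency and appropriate safeguards. In the U.S., transparency obligations derive from CCPA/CPRA, while FTC enforcement focuses on truthfulness and avoiding deceptive design patterns.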

2. Typical Mistakes When Integrating U.S.-Based AI Email Tools

Many companies use U.S.-based AI services without examining their data handling, storage, or transfer practices — exposing themselves to major privacy and audit risks.

2.1 Unintended Data Storage and Shadow Archives

A significant risk is the automatic creation of personal knowledge bases. AI assistants generate transcripts, summaries, drafts, and embeddings, which are often stored in unexpected places. These artifacts can become legally discoverable “business records.”

One expert warns:

“You’re building a massive, searchable archive of each employee’s mind — and if you don’t govern it, a lawyer will ask for it eventually.”

Stored artifacts may include extended summaries that exceed the length of original content, creating retention mismatches and “shadow records.” Organizations are often unaware that the assistant is generating persistent data (notes, summaries, drafts, logs). Leadership remains liable for everything created.

In the U.S., these risks are amplified by eDiscovery obligations, litigation holds, and broad subpoena powers.

2.2 Outdated Policies and Processes

Companies frequently enable AI assistants without updating:

- usage policies

- eDiscovery workflows

- retention schedules

- acceptable use rules

Generated summaries may require longer retention than original emails. Separate notes may cause “shadow archives,” leading to audit deficiencies. Outdated dataflow diagrams are common findings in SOC 2 and ISO audits.

2.3 Overlooking Third-Party Service Providers

Many AI email tools rely on U.S. cloud providers. Organizations often audit only their internal systems and overlook third parties. U.S. privacy frameworks (CCPA/CPRA, GLBA, HIPAA BAAs) require strict vendor management and contractual controls.

Typical audit questions include:

- Does the vendor store data? How long?

- Is the data encrypted at rest and in transit?

- Which subcontractors are involved?

- Are there Data Processing Agreements (DPAs)?

- Are subprocessors independently audited?

Overlooking vendors is a frequent source of audit findings.

2.4 Documentation Gaps and Lack of Staff Training

Without documentation, controls cannot be validated. Auditors require:

- proof of entitlement changes

- proof of deletion

- proof of classification actions

- immutable system logs

Training gaps are equally problematic. Employees often do not understand:

- how AI assistants store data

- how to classify sensitive information

- how to securely prompt

- how to report incidents

This leads to human error and compliance violations.

2.5 Unclear Boundaries in Transatlantic Data Transfers

Many U.S. tools operate under the new EU–U.S. Data Privacy Framework (DPF), but uncertainties remain regarding:

- data retention duration

- access by U.S. agencies

- algorithmic reuse

- secondary processing

Some tools store interactions for 180 days, which can be far longer than the processing purpose requires. Companies must evaluate whether they accept U.S.-based preprocessing or prefer EU-native alternatives.

3. Why Ephemeral Processing Solves 90% of These Problems

Most risks arise from unnecessary data storage. Ephemeral processing means that prompts and outputs exist only temporarily in memory and are deleted immediately afterward, without persistent storage or reuse for model training.

This directly implements GDPR’s data minimization principle (Art. 5(1)(c)) and aligns with HIPAA, SOC 2, and CCPA/CPRA expectations.

3.1 How Ephemeral Processing Works

A research paper on Zero Data Retention in LLM-based Enterprise Assistants defines ephemeral processing as:

- prompts and a model’s output exist only in RAM

- no persistent logs of input or output exist

- no training corpus is built from user data

- no hidden caches remain
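The properties listed above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the cited paper's implementation: `ephemeral_buffer` and `summarize_ephemerally` are hypothetical names, and `model` stands for any callable that wraps an inference call. Python cannot guarantee that every copy of a string is wiped from memory, so real guarantees depend on the runtime and on the vendor's infrastructure.

```python
from contextlib import contextmanager
from typing import Callable


@contextmanager
def ephemeral_buffer(text: str):
    """Hold text in a mutable in-memory buffer and zero it on exit."""
    buf = bytearray(text.encode("utf-8"))
    try:
        yield buf
    finally:
        # Best-effort overwrite in place; nothing is written to disk or logs.
        buf[:] = bytes(len(buf))


def summarize_ephemerally(email_body: str, model: Callable[[str], str]) -> str:
    """Run the model on the email and return only its output.

    The prompt is not logged, cached, or added to any training corpus.
    """
    with ephemeral_buffer(email_body) as buf:
        return model(buf.decode("utf-8"))
```

The point of the sketch is the shape of the data flow: input enters, output leaves, and no code path persists either one.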

A security handbook for AI integrations lists ephemeral processing as the single most effective technical safeguard for privacy compliance. Additional safeguards include:

- secure enclaves

- encryption

- access monitoring

- masking and redaction
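Masking and redaction can be applied before a prompt ever reaches the assistant. The sketch below uses three illustrative regular expressions, which are assumptions chosen for brevity; production redaction usually needs broader patterns and validation (for example, checksum checks on card numbers).

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(text: str) -> str:
    """Replace SSN-like, card-like, and email-like strings with placeholders."""
    text = SSN_RE.sub("[SSN]", text)
    text = CARD_RE.sub("[CARD]", text)
    text = EMAIL_RE.sub("[EMAIL]", text)
    return text


print(redact("Contact jane.doe@example.com, card 4111 1111 1111 1111, SSN 123-45-6789."))
# prints: Contact [EMAIL], card [CARD], SSN [SSN].
```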

3.2 Benefits for Privacy and Compliance

No data accumulation

Eliminates shadow archives and reduces eDiscovery exposure.

Simplified audits

No retention plan is needed for message content, because none is kept; only the minimal audit metadata remains to govern.

Lower liability

With no persistent data, companies reduce obligations under GDPR, HIPAA, GLBA, SOC 2, and U.S. state privacy laws.

Reduced subpoena exposure

Content that is never stored cannot be compelled; only the minimal metadata that remains in audit logs could be requested.

3.3 Limitations and Trade-offs

Ephemeral processing does not eliminate the need for audit logs. Logs should:

- store minimal metadata only

- be encrypted

- be retained briefly

- remain locally accessible where possible

Some use cases require short-term storage, e.g., reporting. Organizations must define strict retention and access controls.
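A metadata-only audit record might look like the following sketch. The field names and the `metadata_record` helper are hypothetical; the idea is that the log proves an action happened without holding the message body, and that the message reference is a keyed fingerprint rather than a raw identifier.

```python
import hashlib
import hmac


def metadata_record(user_id: str, action: str, message_id: str,
                    timestamp: str, key: bytes) -> dict:
    """Build an audit record that contains no message content.

    The message is referenced by an HMAC-SHA256 fingerprint, so the same
    message can be correlated across entries only by someone holding the key.
    """
    fingerprint = hmac.new(key, message_id.encode("utf-8"),
                           hashlib.sha256).hexdigest()
    return {
        "user": user_id,
        "action": action,
        "message_ref": fingerprint,
        "timestamp": timestamp,
    }
```

Encryption at rest and a short retention period would still apply to these records; the sketch covers only the "minimal metadata" property.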

4. Practical Recommendations for SMEs and Consulting Firms

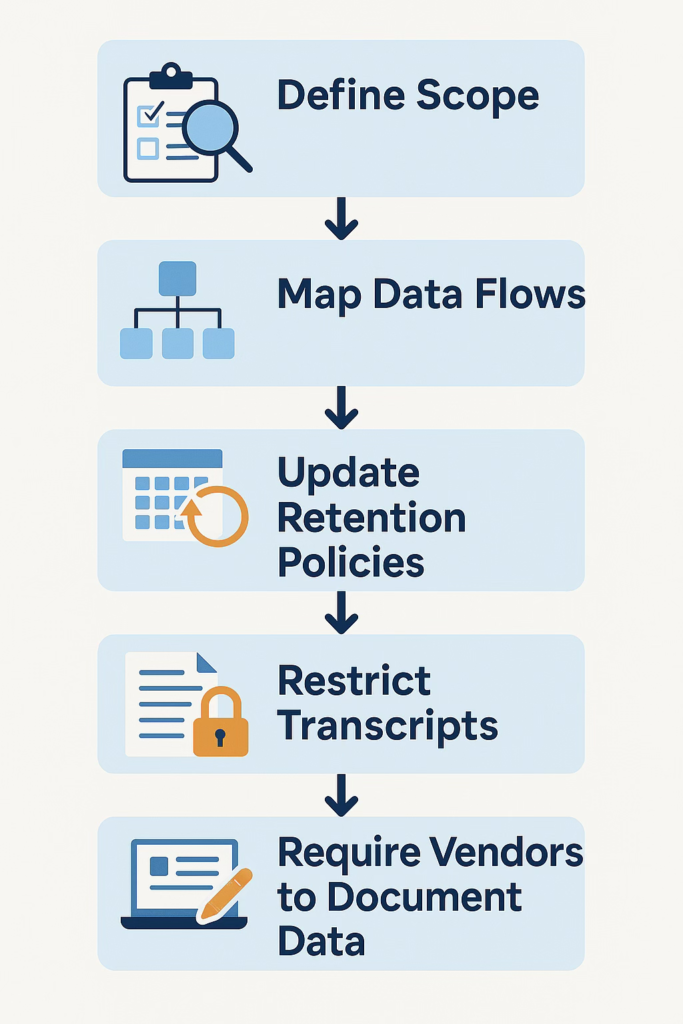

4.1 Define Scope and Map Data Flows

Before deploying AI assistants, organizations must map the full lifecycle:

- data collection

- processing

- storage

- deletion

- subprocessors

This includes hidden caches and third-party systems.
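A dataflow map can start as a simple machine-readable inventory that is checked for gaps. The structure below is a hypothetical sketch: the `DataStore` fields and the `find_retention_gaps` helper are assumptions, not part of any audit framework.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DataStore:
    name: str                       # e.g. "vector index", "user cache"
    holds: str                      # what kind of data lands here
    owner: str                      # internal team or vendor responsible
    retention_days: Optional[int]   # None means no retention rule is defined
    third_party: bool = False       # True if operated by a subprocessor


def find_retention_gaps(stores: List[DataStore]) -> List[str]:
    """Return the names of stores that have no defined retention period."""
    return [s.name for s in stores if s.retention_days is None]


inventory = [
    DataStore("mail server", "original emails", "IT", 365),
    DataStore("vector index", "embeddings of email text", "AI vendor", None, True),
    DataStore("assistant audit log", "access metadata", "IT", 180),
]
print(find_retention_gaps(inventory))  # prints: ['vector index']
```

Keeping the inventory in code or configuration makes it easy to review in the same change process as the systems it describes, which helps avoid the outdated diagrams auditors often flag.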

4.2 Build a Cross-Functional Audit Team

Compliance is not just IT’s responsibility. Include:

- IT

- Legal

- Data Protection

- Compliance

- Business units

This mirrors U.S. expectations under SOC 2 and NIST frameworks.

4.3 Update Policies and Processes

- revise retention and deletion policies

- automate log rotation

- revise access management

- require system-generated evidence

- avoid editable shared-drive evidence

4.4 Evaluate Third Parties and Contracts

Questions to ask:

- Does the U.S. vendor store data? For how long?

- Where is it stored? Which region?

- Are customer prompts used for model training?

- Does the vendor comply with GDPR, CCPA/CPRA, HIPAA, SOC 2?

- Are DPA, BAA, SCCs, or DPF certifications in place?

4.5 Training and Awareness

Employees need training on:

- how AI assistants operate

- how data is generated

- classification of sensitive data

- avoiding PI/PHI/PCI exposure

- secure prompting habits

4.6 Implement Technical Safeguards

- enforce ephemeral processing

- enable Private Data Gateways

- encrypt logs

- minimize metadata capture

- restrict automatic transcription or summarization

4.7 Plan Continuous Monitoring and Audits

Regular internal and external audits ensure:

- policies are followed

- gaps are closed

- vendor compliance remains intact

5. Conclusion

AI email assistants improve efficiency but bring hidden risks through new data stores and complex compliance obligations. Privacy audits uncover these risks by examining storage locations, logs, retention rules, and encryption. Common mistakes — unclear scopes, outdated dataflow maps, undocumented processes — can be avoided with strong governance.

Ephemeral processing is the most powerful way to reduce risk while benefiting from AI. When data is processed only temporarily and not stored, organizations fulfill data minimization by design. Combined with clear policies and contractual safeguards, SMEs can modernize email management without compromising compliance.